This walkthrough runs the example on Windows.

Configure hosts

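Add every cluster node to the Windows hosts file (`C:\Windows\System32\drivers\etc\hosts`). Replace the example IPs with your nodes' addresses:

```
1.1.1.1 master
2.2.2.2 slave1
```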
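To check that Windows picks up the new entries, you can resolve each name from Java. This is a minimal sketch. `HostsCheck` and `resolveAll` are hypothetical names, and the host list is the two entries above. `InetAddress.getByName` consults both the hosts file and DNS, so compare the printed IPs with the ones you configured.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper: resolves each host name and reports the IP address it maps to.
class HostsCheck {

    // Returns host -> resolved IP address, or host -> null if the name cannot be resolved.
    static Map<String, String> resolveAll(List<String> hosts) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String host : hosts) {
            try {
                result.put(host, InetAddress.getByName(host).getHostAddress());
            } catch (UnknownHostException e) {
                result.put(host, null);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Cluster node names from the hosts file entries
        Map<String, String> resolved = resolveAll(List.of("master", "slave1"));
        resolved.forEach((host, ip) ->
                System.out.println(host + " -> " + (ip == null ? "NOT RESOLVED" : ip)));
    }
}
```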

Configure Hadoop

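- Copy the Hadoop installation from the cluster to the local machine. It contains many files, so compress it before copying.

On master:

```shell
# Compress the folder
cd /opt/
zip -r hadoop.zip hadoop/
# Copy /opt/hadoop.zip to the local machine
```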

- Unzip hadoop.zip to a local directory. Note: the directory path must not contain spaces.

- Edit JAVA_HOME in `hadoop\etc\hadoop\hadoop-env.cmd`:

```bat
:: First, look up JAVA_HOME in cmd
echo %JAVA_HOME%
:: => D:\Program_Files\Semeru\jdk-17.0.1.12-openj9\

:: Then set JAVA_HOME in hadoop-env.cmd to that path
set JAVA_HOME=D:\Program_Files\Semeru\jdk-17.0.1.12-openj9\
```
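Hadoop's Windows scripts expect JAVA_HOME to be the JDK root, meaning the folder that contains `bin\java.exe`. They also commonly break when that path contains spaces. The sketch below (hypothetical class `JavaHomeCheck`) checks both conditions for the `JAVA_HOME` environment variable. It only inspects the file system and does not run Hadoop.

```java
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper: sanity-checks a JAVA_HOME value before Hadoop's .cmd scripts use it.
class JavaHomeCheck {

    // True if the directory contains bin\java.exe (Windows) or bin/java (other systems).
    static boolean looksLikeJavaHome(Path home) {
        Path bin = home.resolve("bin");
        return Files.isRegularFile(bin.resolve("java.exe")) || Files.isRegularFile(bin.resolve("java"));
    }

    // Spaces in the path are a common source of trouble for Hadoop's Windows scripts.
    static boolean hasSpaces(String path) {
        return path.contains(" ");
    }

    public static void main(String[] args) {
        String javaHome = System.getenv("JAVA_HOME");
        if (javaHome == null) {
            System.out.println("JAVA_HOME is not set");
            return;
        }
        System.out.println("JAVA_HOME = " + javaHome);
        System.out.println("contains bin\\java: " + looksLikeJavaHome(Path.of(javaHome)));
        System.out.println("contains spaces: " + hasSpaces(javaHome));
    }
}
```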

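- Set the Hadoop environment variables

Add these system variables, where HADOOP_HOME is the directory hadoop.zip was unzipped into:

```
HADOOP_HOME=hadoop_path_here
HADOOP_USER_NAME=root
```

Add these entries to Path:

```
%HADOOP_HOME%\bin
%HADOOP_HOME%\sbin
```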
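To confirm the variables are visible to new processes, you can read them back from Java. This sketch makes a few assumptions. `HadoopEnvCheck` is a hypothetical name. Path entries are compared case-insensitively, with `/` treated as `\` and trailing slashes ignored. The check must run in a console opened after the variables were set, because consoles that are already open keep the old environment.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.regex.Pattern;

// Hypothetical helper: checks that HADOOP_HOME\bin and HADOOP_HOME\sbin are on the Path.
class HadoopEnvCheck {

    // Normalizes a directory for comparison: trims, uses backslashes, drops trailing
    // backslashes, and lower-cases it because Windows paths are case-insensitive.
    static String normalize(String dir) {
        String s = dir.trim().replace('/', '\\');
        while (s.endsWith("\\")) {
            s = s.substring(0, s.length() - 1);
        }
        return s.toLowerCase(Locale.ROOT);
    }

    // Returns which of "bin" and "sbin" under hadoopHome are missing from pathValue.
    static List<String> missingEntries(String pathValue, String hadoopHome, String separator) {
        List<String> present = new ArrayList<>();
        for (String entry : pathValue.split(Pattern.quote(separator))) {
            present.add(normalize(entry));
        }
        List<String> missing = new ArrayList<>();
        for (String sub : List.of("bin", "sbin")) {
            if (!present.contains(normalize(hadoopHome) + "\\" + sub)) {
                missing.add(sub);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        System.out.println("HADOOP_HOME = " + home);
        System.out.println("HADOOP_USER_NAME = " + System.getenv("HADOOP_USER_NAME"));
        if (home != null) {
            // System.getenv is case-insensitive on Windows, so "PATH" also finds "Path"
            String path = System.getenv("PATH");
            System.out.println("Missing from Path: "
                    + missingEntries(path == null ? "" : path, home, File.pathSeparator));
        }
    }
}
```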

- Copy winutils into `hadoop\bin`:

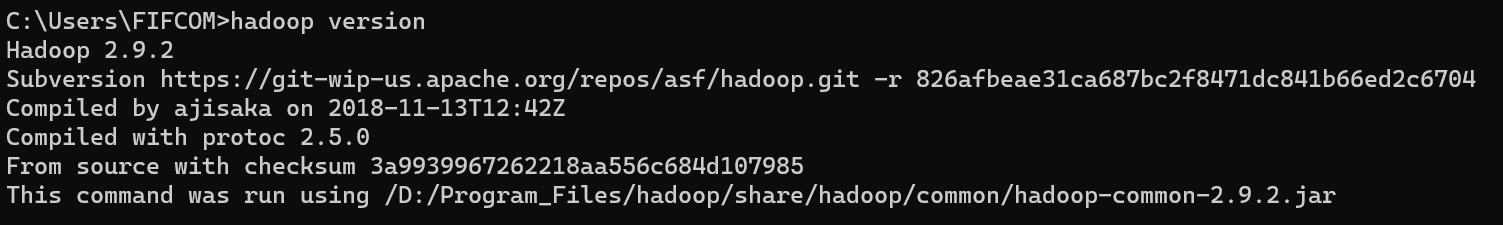
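Hadoop's client code looks for `winutils.exe` in the `bin` folder of the Hadoop home. It takes that home from the `hadoop.home.dir` system property or, failing that, from the `HADOOP_HOME` variable. The hypothetical `WinutilsCheck` below verifies that the file is in place and that the path contains no spaces, as required when unzipping.

```java
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper: checks the local Hadoop directory used by Hadoop/HBase clients on Windows.
class WinutilsCheck {

    // True if <hadoopHome>\bin\winutils.exe exists as a regular file.
    static boolean hasWinutils(Path hadoopHome) {
        return Files.isRegularFile(hadoopHome.resolve("bin").resolve("winutils.exe"));
    }

    // True if the absolute path contains a space.
    static boolean pathHasSpaces(Path hadoopHome) {
        return hadoopHome.toAbsolutePath().toString().contains(" ");
    }

    public static void main(String[] args) {
        // System property first, then the environment variable
        String home = System.getProperty("hadoop.home.dir", System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        Path dir = Path.of(home);
        System.out.println("winutils.exe found: " + hasWinutils(dir));
        System.out.println("path contains spaces: " + pathHasSpaces(dir));
    }
}
```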

Test

Run `hadoop version`. If it prints the Hadoop version without errors, the configuration works.
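The same check can be scripted. This sketch assumes the `hadoop version` output contains a line of the form `Hadoop <version>` and that `hadoop` is on the Path. On Windows, `hadoop` is a `.cmd` script, so the sketch starts it through `cmd /c`. `HadoopVersionCheck` and `parseVersion` are hypothetical names.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

// Hypothetical helper: runs "hadoop version" and extracts the version number.
class HadoopVersionCheck {

    // Matches a line starting with "Hadoop <version>"; other lines (e.g. warnings) are skipped.
    private static final Pattern VERSION_LINE = Pattern.compile("^Hadoop (\\S+)", Pattern.MULTILINE);

    static Optional<String> parseVersion(String output) {
        Matcher m = VERSION_LINE.matcher(output);
        return m.find() ? Optional.of(m.group(1)) : Optional.empty();
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // "hadoop" is a .cmd script on Windows, so it is launched through cmd /c
        Process process = new ProcessBuilder("cmd", "/c", "hadoop", "version")
                .redirectErrorStream(true)
                .start();
        String output;
        try (BufferedReader reader = new BufferedReader(new InputStreamReader(process.getInputStream()))) {
            output = reader.lines().collect(Collectors.joining("\n"));
        }
        int exitCode = process.waitFor();
        System.out.println("exit code: " + exitCode);
        System.out.println("version: " + parseVersion(output).orElse("not found"));
    }
}
```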

Create test data

In the hbase shell:

```
create 'scores','grade','course'
put 'scores','1001','grade:','1'
put 'scores','1001','course:art','80'
put 'scores','1001','course:math','89'
put 'scores','1002','grade:','2'
put 'scores','1002','course:art','87'
put 'scores','1002','course:math','57'
put 'scores','1003','course:math','90'

# Check the inserted data
scan "scores"
```
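Conceptually, HBase keeps a table sorted by row key, then by column family, then by qualifier within each row. That is the order `scan` prints. The sketch below models the `scores` data in plain Java with nested `TreeMap`s. It is not HBase code, and it ignores timestamps and versions. It also relies on the row keys being ASCII, so that `String` order matches HBase's byte order. The model shows that the puts above produce seven cells, that `grade:` is a column with an empty qualifier, and that row `1003` has no `grade` cell.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simplified in-memory model of the 'scores' table (not HBase code; timestamps/versions omitted).
// Layout: row key -> column family -> qualifier -> value, each level sorted like HBase.
class ScoresModel {

    private final TreeMap<String, TreeMap<String, TreeMap<String, String>>> rows = new TreeMap<>();

    // Mirrors: put 'scores', row, 'family:qualifier', value
    void put(String row, String column, String value) {
        int colon = column.indexOf(':');
        if (colon < 0) {
            throw new IllegalArgumentException("expected family:qualifier, got " + column);
        }
        String family = column.substring(0, colon);
        String qualifier = column.substring(colon + 1); // may be empty, e.g. "grade:"
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            .put(qualifier, value);
    }

    // Mirrors: scan 'scores' -> one line per cell, in sorted order
    List<String> scan() {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, TreeMap<String, TreeMap<String, String>>> row : rows.entrySet()) {
            for (Map.Entry<String, TreeMap<String, String>> fam : row.getValue().entrySet()) {
                for (Map.Entry<String, String> cell : fam.getValue().entrySet()) {
                    out.add(row.getKey() + " " + fam.getKey() + ":" + cell.getKey() + "=" + cell.getValue());
                }
            }
        }
        return out;
    }

    // The same puts as in the hbase shell session
    static ScoresModel sampleData() {
        ScoresModel t = new ScoresModel();
        t.put("1001", "grade:", "1");
        t.put("1001", "course:art", "80");
        t.put("1001", "course:math", "89");
        t.put("1002", "grade:", "2");
        t.put("1002", "course:art", "87");
        t.put("1002", "course:math", "57");
        t.put("1003", "course:math", "90");
        return t;
    }

    public static void main(String[] args) {
        sampleData().scan().forEach(System.out::println);
    }
}
```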

Create the project

The IDE used here is Eclipse.

- Download hbase/lib from master

```shell
cd /opt/hbase
zip -r lib.zip lib/
# Copy /opt/hbase/lib.zip to the local machine; optionally upload it to HDFS first:
# hdfs dfs -put lib.zip /
```

- Unzip it and put the lib folder in the project directory. Select all jar files under lib and under lib\client-facing-thirdparty, then right-click -> Build Path -> Add to Build Path.
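Outside Eclipse, you can put the same jars on a classpath by hand, for example to run `Main` with `java -cp`. This minimal sketch (hypothetical class `LibClasspath`) collects the `.jar` files directly inside `lib` and `lib\client-facing-thirdparty` and joins them with the platform separator. The `java` launcher also accepts a `lib\*` wildcard entry, which is the simpler choice when no filtering is needed.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper: builds a classpath from the jars in lib and lib/client-facing-thirdparty.
class LibClasspath {

    // Lists *.jar files directly inside dir (not recursive), sorted; empty if dir is missing.
    static List<Path> jarsIn(Path dir) throws IOException {
        if (!Files.isDirectory(dir)) {
            return List.of();
        }
        try (Stream<Path> files = Files.list(dir)) {
            return files.filter(p -> p.getFileName().toString().endsWith(".jar"))
                        .filter(Files::isRegularFile)
                        .sorted()
                        .collect(Collectors.toList());
        }
    }

    // Joins the jars of both folders with the platform separator (';' on Windows).
    static String classpath(Path lib) throws IOException {
        List<Path> jars = new ArrayList<>(jarsIn(lib));
        jars.addAll(jarsIn(lib.resolve("client-facing-thirdparty")));
        return jars.stream().map(Path::toString).collect(Collectors.joining(File.pathSeparator));
    }

    public static void main(String[] args) throws IOException {
        // Assumes lib is in the current working directory (the project root)
        System.out.println(classpath(Path.of("lib")));
    }
}
```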

- Create package demo and, in it, class Main:

```java
package demo;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.*;
import org.apache.hadoop.hbase.client.*;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

public class Main {

    public static void main(String[] args) {
        // Local Hadoop directory (the one containing bin\winutils.exe)
        System.setProperty("hadoop.home.dir", "D:\\Program_Files\\hadoop");

        // Client configuration; hbase-site.xml is loaded from the classpath
        Configuration conf = HBaseConfiguration.create();
        conf.addResource("hbase-site.xml");

        // try-with-resources closes the connection and admin even if an exception is thrown
        try (Connection conn = ConnectionFactory.createConnection(conf);
             Admin admin = conn.getAdmin()) {
            System.out.println("=== Connecting to HBase and listing all tables ===");
            for (TableName tn : admin.listTableNames()) {
                System.out.println(tn);
            }
            System.out.println("Server test succeeded!");

            // Full table scan of 'scores'; the table and scanner are closed automatically
            try (Table tb = conn.getTable(TableName.valueOf("scores"));
                 ResultScanner scanner = tb.getScanner(new Scan())) {
                for (Result row : scanner) {
                    for (Cell cell : row.listCells()) {
                        System.out.println("Rowkey:" + Bytes.toString(row.getRow())
                                + " Family:" + Bytes.toString(CellUtil.cloneFamily(cell))
                                + " Qualifier:" + Bytes.toString(CellUtil.cloneQualifier(cell))
                                + " Value:" + Bytes.toString(CellUtil.cloneValue(cell)));
                    }
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

- Create hbase-site.xml in the project root:

```xml
<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://master:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.master.port</name>
    <value>16000</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>master:2181,slave1:2181</value>
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/opt/apache-zookeeper-3.8.0-bin/data</value>
  </property>
  <property>
    <name>hbase.unsafe.stream.capability.enforce</name>
    <value>false</value>
  </property>
</configuration>
```
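To double-check what the client will read from this file, you can list its properties with the JDK's built-in DOM parser. This sketch uses hypothetical names (`SiteXmlReader`, `quorumHosts`). It maps each `<name>` to its `<value>` and extracts the host names from `hbase.zookeeper.quorum`. The client machine must be able to resolve those hosts through the hosts file configured earlier.

```java
import java.io.ByteArrayInputStream;
import java.io.File;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

// Hypothetical helper: lists the <property> entries of an hbase-site.xml document.
class SiteXmlReader {

    // Maps each <property>'s <name> to its <value>; entries missing either element are skipped.
    static Map<String, String> toMap(Document doc) {
        Map<String, String> props = new LinkedHashMap<>();
        NodeList properties = doc.getElementsByTagName("property");
        for (int i = 0; i < properties.getLength(); i++) {
            Element property = (Element) properties.item(i);
            Node name = property.getElementsByTagName("name").item(0);
            Node value = property.getElementsByTagName("value").item(0);
            if (name != null && value != null) {
                props.put(name.getTextContent().trim(), value.getTextContent().trim());
            }
        }
        return props;
    }

    // Parses XML text held in a string.
    static Map<String, String> parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        return toMap(doc);
    }

    // "master:2181,slave1:2181" -> [master, slave1]
    static List<String> quorumHosts(String quorum) {
        List<String> hosts = new ArrayList<>();
        for (String entry : quorum.split(",")) {
            String e = entry.trim();
            int colon = e.indexOf(':');
            hosts.add(colon >= 0 ? e.substring(0, colon) : e);
        }
        return hosts;
    }

    public static void main(String[] args) throws Exception {
        // Reads hbase-site.xml from the working directory (by default the project root in Eclipse)
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new File("hbase-site.xml"));
        Map<String, String> props = toMap(doc);
        props.forEach((k, v) -> System.out.println(k + " = " + v));
        String quorum = props.get("hbase.zookeeper.quorum");
        if (quorum != null) {
            System.out.println("ZooKeeper hosts: " + quorumHosts(quorum));
        }
    }
}
```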

- Create log4j.properties in the project root. This configuration shows only error logs. Change `error` to `info` or `warn` to see more output.

```properties
log4j.rootLogger=error,console
log4j.appender.console=org.apache.log4j.ConsoleAppender
log4j.appender.console.layout=org.apache.log4j.PatternLayout
log4j.appender.console.layout.ConversionPattern=%d %p [%c] - %m%n
```
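`log4j.rootLogger=error,console` sets the root logger's level to ERROR and attaches the `console` appender, so only ERROR and FATAL messages are printed. The sketch below is a simplified model of that rule, not the log4j API. It parses a `rootLogger` value and compares levels using the log4j 1.x ordering (TRACE < DEBUG < INFO < WARN < ERROR < FATAL), ignoring ALL and OFF. `RootLoggerModel` is a hypothetical name.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

// Simplified model of log4j 1.x root-logger level filtering; not the log4j API itself.
class RootLoggerModel {

    // Declared from least to most severe, matching log4j 1.x ordering (ALL/OFF omitted).
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR, FATAL }

    final Level threshold;
    final List<String> appenders;

    // Parses a value such as "error,console": the first token is the level, the rest are appender names.
    RootLoggerModel(String rootLoggerValue) {
        String[] parts = rootLoggerValue.split(",");
        this.threshold = Level.valueOf(parts[0].trim().toUpperCase(Locale.ROOT));
        this.appenders = Arrays.stream(parts).skip(1).map(String::trim).collect(Collectors.toList());
    }

    // A message is printed when its level is at least as severe as the threshold.
    boolean isPrinted(Level messageLevel) {
        return messageLevel.compareTo(threshold) >= 0;
    }

    public static void main(String[] args) {
        RootLoggerModel model = new RootLoggerModel("error,console");
        for (Level level : Level.values()) {
            System.out.println(level + " printed: " + model.isPrinted(level));
        }
    }
}
```

To see warnings as well, the model's input would become `warn,console`, matching the edit suggested for log4j.properties.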

- Run Main.java. If it prints the data inserted above, the setup works.