Hive默认不支持数据的修改和删除,需编辑配置文件hive-site.xml以允许

<property>

<name>hive.support.concurrency</name>

<value>true</value>

</property>

<property>

<name>hive.enforce.bucketing</name>

<value>true</value>

</property>

<property>

<name>hive.exec.dynamic.partition.mode</name>

<value>nonstrict</value>

</property>

<property>

<name>hive.txn.manager</name>

<value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value>

</property>

<property>

<name>hive.compactor.initiator.on</name>

<value>true</value>

</property>

<property>

<name>hive.compactor.worker.threads</name>

<value>1</value>

</property>

并配置thrift的账号(root)密码(root)

<property>

<name>hive.server2.thrift.client.user</name>

<value>root</value>

</property>

<property>

<name>hive.server2.thrift.client.password</name>

<value>root</value>

</property>

<property>

<name>hive.server2.authentication</name>

<value>NONE</value>

</property>

环境准备

- 启动Hadoop

./opt/hadoop/sbin/start-all.sh

- 启动HiveServer2

cd /opt/hive/bin/

./hive --service hiveserver2 &

连接到HiveServer2

创建一个Maven项目,添加依赖项目

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.9.2</version> <!-- 与服务器Hadoop版本对应 -->

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId> <!-- jdbc -->

<version>2.3.9</version> <!-- 与服务器Hive版本对应 -->

<scope>provided</scope>

</dependency>

</dependencies>

在src/main/java/下创建软件包api,并在其下创建Main类

连接到HiveServer2并展示所有数据库

package api;

import java.sql.*;

class HiveAPI {

Connection conn;

HiveAPI() {

try {

Class.forName("org.apache.hive.jdbc.HiveDriver"); // 加载驱动

conn = DriverManager.getConnection("jdbc:hive2://master:10000", "root", "root"); // hive2的默认端口是10000

} catch (Exception e) {

e.printStackTrace();

}

}

public void showDB() {

// 查看数据现有的数据库

try {

PreparedStatement ps = conn.prepareStatement("show databases");

ResultSet rs = ps.executeQuery();

for (int i = 1; rs.next(); i++) {

System.out.println(i + "\t" + rs.getString(1));

}

} catch (Exception e) {

e.printStackTrace();

}

}

}

public class Main {

public static void main(String[] args) {

HiveAPI api = new HiveAPI();

api.showDB();

}

}

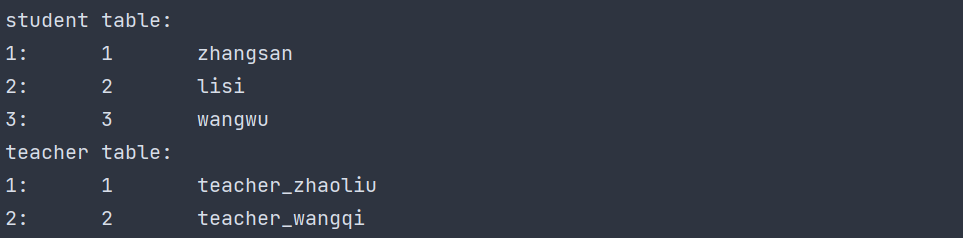

在curd数据库中有表:

- student表

| id | name |

|---|---|

| 1 | zhangsan |

| 2 | lisi |

| 3 | wangwu |

查询数据

使用select * from db.tb来查询数据。因此需传入数据库名称和表名称

class HiveAPI {

Connection conn;

HiveAPI() { ... }

public void select(String db, String tb) {

try {

String sql = "select * from " + db + "." + tb;

PreparedStatement ps = conn.prepareStatement(sql); // 构建查询语句对象

ResultSet rs = ps.executeQuery(); // 执行查询,返回结果集

for (int i = 1; rs.next(); i++) {

// 使用rs.getString(n)来获取某列的数据

System.out.println(i + ":\t" + rs.getString(1) + "\t" + rs.getString(2));

}

} catch (Exception e) {

e.printStackTrace();

}

}

}

public class Main {

public static void main(String[] args) {

HiveAPI api = new HiveAPI();

System.out.println("student table:");

api.select("curd", "student");

System.out.println("teacher table:");

api.select("curd", "teacher");

}

}

插入数据

使用insert into db.tb values(data[0], data[1])来插入数据

class HiveAPI {

Connection conn;

HiveAPI() { ... }

public void select(String db, String tb) { ... }

public void insert(String db, String tb, String[] data) {

try {

// 生成插入语句

String sql = "insert into " + db + "." + tb + " values(";

for (String x : data) {

sql += "'" + x + "',";

}

sql = sql.substring(0, sql.length() - 1);

sql += ")";

PreparedStatement ps = conn.prepareStatement(sql); // 构建查询语句对象

ps.execute(); // 执行查询

} catch (Exception e) {

e.printStackTrace();

}

}

}

public class Main {

public static void main(String[] args) {

HiveAPI api = new HiveAPI();

// 向student表中插入4, insert

api.insert("curd", "student", new String[]{"4", "insert"});

api.select("curd", "student"); // 查看是否插入成功

}

}

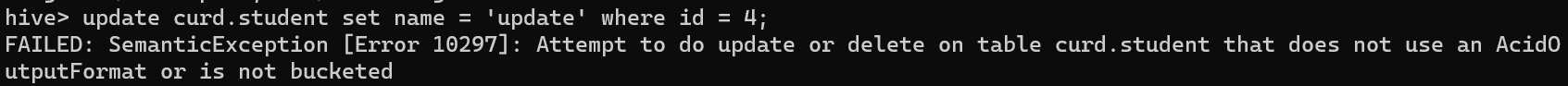

更新数据

Hive默认不支持数据的更新、删除,需修改Hive的配置文件。即便修改了配置文件,也仅能对事务表进行更新、删除。

对普通表进行更新仍会报错

创建事务表curd.txn,并插入数据

use curd;

create table txn( id int, name string)

clustered by (id) into 2 buckets stored as orc

tblproperties ("transactional"="true");

insert into txn values(1,'zhangsan');

insert into txn values(2,'lisi');

insert into txn values(3,'wangwu');

使用update db.tb set name = 'new_name' where id = 3来更新数据

class HiveAPI {

Connection conn;

HiveAPI() { ... }

public void select(String db, String tb) { ... }

public void update(String db, String tb, String id, String new_name) {

try {

String sql = "update " + db + "." + tb + " set name = '" + new_name + "' where id = " + id;

PreparedStatement ps = conn.prepareStatement(sql); // 构建删除语句对象

ps.setObject(1, id); // 绑定变量值

ps.executeUpdate(); // 执行更新操作

} catch (Exception e) {

e.printStackTrace();

}

}

}

public class Main {

public static void main(String[] args) {

HiveAPI api = new HiveAPI();

System.out.println("before update");

api.select("curd", "txn");

api.update("curd", "txn", "3", "update");

System.out.println("after update");

api.select("curd", "txn");

}

}

删除数据

同样的,删除操作仅能在事务表中执行。使用delete from db.tb where id = 3来删除

class HiveAPI {

Connection conn;

HiveAPI() { ... }

public void select(String db, String tb) { ... }

public void delete(String db, String tb, String id) {

try {

String sql = "delete from " + db + "." + tb + " where id = " + id;

PreparedStatement ps = conn.prepareStatement(sql); // 构建更新语句对象

ps.setObject(1, id); // 绑定变量值

ps.executeUpdate(); // 执行更新操作

} catch (Exception e) {

e.printStackTrace();

}

}

}

public class Main {

public static void main(String[] args) {

HiveAPI api = new HiveAPI();

System.out.println("before delete");

api.select("curd", "txn");

api.delete("curd", "txn", "3");

System.out.println("after delete");

api.select("curd", "txn");

}

}